Module control work №1

on the "Basic theory of communication"

(2012/2013 academic year)

Ticket 9

1. Optimal linear filtering.

2. Information characteristics of sources, messages and communication channels.

3. Units of information.

Completed by:

Student of IAN RS 309

Surnikov Konstantin

3. Units of information.

The byte (pronounced /ˈbaɪt/) is a unit of digital information in computing and telecommunications that most commonly consists of eight bits. Historically, a byte was the number of bits used to encode a single character of text in a computer, and for this reason it is the basic addressable element in many computer architectures. The size of the byte has historically been hardware dependent, and no definitive standard mandated it. The de facto standard of eight bits is a convenient power of two, permitting the values 0 through 255 for one byte. ISO/IEC 80000-13 codified this common meaning in a formal standard. Many types of applications use variables representable in eight or fewer bits, and processor designers optimize for this common usage. The popularity of major commercial computing architectures has aided the ubiquitous acceptance of the 8-bit size.

The term octet was defined to explicitly denote a sequence of 8 bits because of the ambiguity associated at the time with the term byte.

The unit symbol for the byte is specified in IEC 80000-13, IEEE 1541 and the Metric Interchange Format as the upper-case character B.

In the International System of Units (SI), B is the symbol of the bel, a unit of logarithmic power ratios named after Alexander Graham Bell. The usage of B for byte therefore conflicts with this definition. It is also not consistent with the SI convention that only units named after persons should be capitalized. However, there is little danger of confusion because the bel is a rarely used unit. It is used primarily in its decadic fraction, the decibel (dB), for signal strength and sound pressure level measurements, while a unit for one tenth of a byte, i.e. the decibyte, is never used.

The unit symbol kB is commonly used for kilobyte, but may be confused with the still often-used abbreviation of kb for kilobit. IEEE 1541 specifies the lower case character b as the symbol for bit; however, ISO/IEC 80000-13 and Metric-Interchange-Format specify the abbreviation bit (e.g., Mbit for megabit) for the symbol, providing disambiguation from B for byte.
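Because B (byte) and bit differ by a factor of eight, a small conversion makes the distinction concrete. The following is a minimal sketch; the function name and the 100 Mbit/s figure are illustrative assumptions, not taken from the text.

```python
BITS_PER_BYTE = 8  # the de facto 8-bit byte (octet)


def mbit_to_mbyte(megabits: float) -> float:
    """Convert a quantity in megabits (Mbit) to megabytes (MB)."""
    return megabits / BITS_PER_BYTE


# A link rated at 100 Mbit/s carries at most 12.5 MB/s of data.
print(mbit_to_mbyte(100))  # 12.5
```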

The lowercase letter o is defined as the symbol for octet in IEC 80000-13. It is commonly used in several non-English languages (e.g., French and Romanian) and is also combined with metric prefixes (for example, ko and Mo).

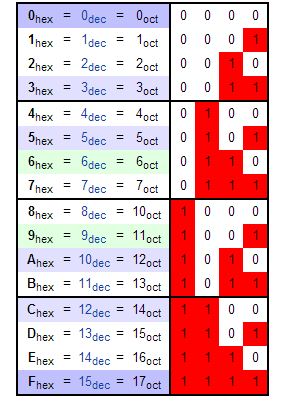

In computing, a nibble (often nybble or even nyble to match the vowels of byte) is a four-bit aggregation, or half an octet. As a nibble contains 4 bits, there are sixteen ($2^4$) possible values, so a nibble corresponds to a single hexadecimal digit (thus, it is often referred to as a "hex digit" or "hexit").

A full byte (octet) is represented by two hexadecimal digits; therefore, it is common to display a byte of information as two nibbles. The nibble is often called a "semioctet" or a "quartet" in a networking or telecommunication context. Sometimes the set of all 256 byte values is represented as a 16×16 table, which gives easily readable hexadecimal codes for each value.
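The split of a byte into two nibbles can be sketched with a shift and a mask. The helper names below are illustrative assumptions, not part of the original text.

```python
def split_nibbles(byte: int) -> tuple[int, int]:
    """Return the (high, low) nibbles of an 8-bit value."""
    if not 0 <= byte <= 0xFF:
        raise ValueError("expected a value in the range 0..255")
    return byte >> 4, byte & 0x0F


def join_nibbles(high: int, low: int) -> int:
    """Rebuild the byte from its high and low nibbles."""
    return (high << 4) | low


high, low = split_nibbles(0x42)
# Each nibble maps to exactly one hexadecimal digit.
print(f"high nibble = {high:04b} (hex {high:X}), low nibble = {low:04b} (hex {low:X})")
```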

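The sixteen nibbles and their equivalents in other numeral systems:

| Hexadecimal | Binary | Decimal |
|---|---|---|
| 0 | 0000 | 0 |
| 1 | 0001 | 1 |
| 2 | 0010 | 2 |
| 3 | 0011 | 3 |
| 4 | 0100 | 4 |
| 5 | 0101 | 5 |
| 6 | 0110 | 6 |
| 7 | 0111 | 7 |
| 8 | 1000 | 8 |
| 9 | 1001 | 9 |
| A | 1010 | 10 |
| B | 1011 | 11 |
| C | 1100 | 12 |
| D | 1101 | 13 |
| E | 1110 | 14 |
| F | 1111 | 15 |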

One early recorded use of the term "nybble" was in 1977 within the consumer-banking technology group at Citibank, which created a pre-ISO 8583 standard for transactional messages between cash machines and Citibank's data centers, in which a NABBLE was the basic informational unit.

The term "nibble" originates from the fact that the term "byte" is a homophone of the English word "bite". A nibble is a small bite, which in this context is construed as "half a bite". The alternative spelling "nybble" parallels the spelling of "byte", as noted in editorials in Kilobaud and Byte in the early 1980s.

The nibble is used to describe the amount of memory needed to store one digit of a number held in packed decimal format on an IBM mainframe. This technique is used to make computations faster and debugging easier. An 8-bit byte is split in half and each nibble stores one digit. The last nibble of the variable is reserved for the sign. Thus a variable that can store up to nine digits is "packed" into 5 bytes (nine digit nibbles plus one sign nibble). Debugging is easier because the numbers are readable in a hex dump, where two hex digits represent the value of one byte, as $16 \times 16 = 2^8$.
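The packing described above can be sketched as follows. The sign codes 0xC (positive) and 0xD (negative) follow the usual IBM packed-decimal convention and are an assumption here, since the text does not name them; the function name is also illustrative.

```python
def pack_decimal(value: int) -> bytes:
    """Pack an integer one digit per nibble, with the sign in the last nibble."""
    sign = 0xC if value >= 0 else 0xD  # assumed IBM sign codes
    nibbles = [int(d) for d in str(abs(value))] + [sign]
    if len(nibbles) % 2:
        nibbles.insert(0, 0)  # pad with a leading zero nibble to fill whole bytes
    pairs = zip(nibbles[0::2], nibbles[1::2])
    return bytes((high << 4) | low for high, low in pairs)


packed = pack_decimal(123456789)
# Nine digits plus one sign nibble occupy five bytes;
# the digits stay readable in the hex dump.
print(packed.hex().upper(), len(packed))
```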

Historically, there have been cases where the term "nybble" was used for a set of fewer than 8 bits, but not necessarily 4. In the Apple II microcomputer line, much of the disk drive control was implemented in software. Writing data to a disk was done by converting 256-byte pages into sets of 5-bit or, later, 6-bit nibbles; loading data from the disk required the reverse. Note that the term byte also had this ambiguity; at one time, byte meant a set of bits but not necessarily 8. Today, the terms "byte" and "nibble" generally refer to 8- and 4-bit collections, respectively, and are not often used for other sizes. The term "semi-nibble" is used to refer to a 2-bit collection, or half a nibble.

Binary to hexadecimal:

| Binary | Hexadecimal |
|---|---|
| 0100 0010 | 42 |
| 0010 0000 1001 | 209 |
| 0001 0100 1001 | 149 |
| 0011 1001 0110 | 396 |
| 0001 0000 0001 | 101 |
| 0011 0101 0100 | 354 |
| 0001 0110 0100 | 164 |
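The conversions listed above follow from reading each group of four bits as one hexadecimal digit. A minimal sketch of that procedure, with an illustrative function name:

```python
def binary_to_hex(bits: str) -> str:
    """Convert a binary string (spaces allowed) to hex, one nibble per digit."""
    digits = bits.replace(" ", "")
    # Left-pad with zeros so the length is a multiple of four.
    digits = digits.zfill(-(-len(digits) // 4) * 4)
    nibbles = (digits[i:i + 4] for i in range(0, len(digits), 4))
    return "".join(f"{int(nibble, 2):X}" for nibble in nibbles)


print(binary_to_hex("0100 0010"))       # 42
print(binary_to_hex("0011 1001 0110"))  # 396
```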

2. Information characteristics of sources, messages and communication channels.

One of the basic tasks of information transmission theory is to develop methods for calculating these characteristics.

Let us consider this task for the simpler case of discrete message transmission.

Let $m$ denote the size of the alphabet $A$ of a discrete message source. Recall that the alphabet is the finite set of symbols used to form messages. Assume that each message consists of $n$ symbols. We now show how to determine the quantity of information in the messages of such a source.

One could take the total number

$$N_0 = m^n \qquad (2.1)$$

of messages of length $n$ as the information characteristic of the message source, but this is inconvenient because $N_0$ depends exponentially on $n$.

In 1928 R. Hartley proposed taking the logarithm of this quantity and using a logarithmic measure of the quantity of information:

$$I = \log N_0 = n \log m. \qquad (2.2)$$
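As a numerical illustration of Hartley's measure, the sketch below evaluates $n \log_2 m$ in bits. The choice of base 2 and the example values (a 32-symbol alphabet, messages of 10 symbols) are assumptions made for illustration.

```python
import math


def hartley_information(m: int, n: int) -> float:
    """Hartley's measure I = n * log2(m), in bits, for n symbols from m."""
    return n * math.log2(m)


m, n = 32, 10
print(m ** n)                     # number of distinct messages N0 = m^n
print(hartley_information(m, n))  # 50.0 bits, linear in n
```

The measure grows linearly with the message length, whereas the message count $N_0$ grows exponentially.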

Formula (2.2) does not reflect the random character of message formation. To remove this shortcoming, the quantity of information must be related to the probabilities with which symbols occur. C. Shannon solved this problem in 1948. The reasoning is as follows. If all symbols of the alphabet are equally probable, the quantity of information carried by one symbol is $I_1 = \log m$. The probability of each symbol is $p = 1/m$, hence $m = 1/p$. Substituting this value into the formula for $I_1$ gives $I_1 = \log(1/p) = -\log p$. This relation connects the quantity of information carried by one symbol with the probability of that symbol. In real messages the symbols have different probabilities. Let $p(a_i)$ denote the probability that the symbol $a_i \in A$ occurs in a message. Then the quantity of information carried by this symbol is $I_i = -\log p(a_i)$.

The source entropy, that is, the average quantity of information contained in one symbol of the message source, is obtained by averaging over the whole alphabet:

$$H(A) = -\sum_{i=1}^{m} p(a_i) \log_2 p(a_i), \quad \text{bit/symb.}$$
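The averaging over the alphabet can be sketched in code as $-\sum_i p(a_i) \log_2 p(a_i)$. The function name and the sample distributions are illustrative assumptions.

```python
import math


def entropy(probabilities: list[float]) -> float:
    """Source entropy in bit/symbol for the given symbol probabilities."""
    if not math.isclose(sum(probabilities), 1.0):
        raise ValueError("probabilities must sum to 1")
    # Symbols with zero probability contribute nothing to the sum.
    return -sum(p * math.log2(p) for p in probabilities if p > 0)


print(entropy([0.25, 0.25, 0.25, 0.25]))  # equiprobable: log2(4) = 2.0
print(entropy([0.5, 0.25, 0.25]))         # non-uniform: 1.5
```

For equiprobable symbols the result coincides with $\log_2 m$; any other distribution over the same alphabet gives a smaller value.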

Conditional source entropy.

To take into account statistical (correlation) dependencies between the symbols of a message, the concept of conditional entropy was introduced:

$$H(A'|A) = -\sum_{i} p(a_i) \sum_{j} p(a'_j \mid a_i) \log_2 p(a'_j \mid a_i), \qquad (2.3)$$

where $p(a'_j \mid a_i)$ is the probability that $a'_j$ occurs given that $a_i$ appeared before it.
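A minimal sketch of conditional entropy for a source with dependence between adjacent symbols, assuming the standard form $-\sum_i p(a_i) \sum_j p(a'_j \mid a_i) \log_2 p(a'_j \mid a_i)$. The two-symbol transition probabilities are made-up illustrative values.

```python
import math


def conditional_entropy(p: list[float], transition: list[list[float]]) -> float:
    """Conditional entropy in bit/symbol.

    p[i] is p(a_i); transition[i][j] is p(a'_j | a_i).
    """
    total = 0.0
    for p_i, row in zip(p, transition):
        for p_j_given_i in row:
            if p_j_given_i > 0:
                total -= p_i * p_j_given_i * math.log2(p_j_given_i)
    return total


p = [0.5, 0.5]
independent = [[0.5, 0.5], [0.5, 0.5]]  # next symbol ignores the previous one
correlated = [[0.9, 0.1], [0.1, 0.9]]   # symbols tend to repeat

print(conditional_entropy(p, independent))  # 1.0
print(conditional_entropy(p, correlated))   # below 1 bit/symbol
```

With independent symbols the conditional entropy equals the unconditional one; correlation lowers it.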

Conditional entropy is the average quantity of information carried by one symbol of a message when there are correlation dependencies between any two adjacent symbols. Because of these correlations, and because symbols in real messages are not equiprobable, the quantity of information carried by one symbol decreases. Quantitatively, this loss of information is characterized by the redundancy factor

$$R = \frac{H_2 - H_1}{H_2} = 1 - \frac{H_1}{H_2}, \qquad (2.4)$$

where $H_1$ is the quantity of information carried by one symbol in real messages and $H_2$ is the maximum quantity of information that one symbol can carry. For European languages the redundancy of messages is … .
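The redundancy factor can be illustrated numerically, assuming the usual definition $1 - H_1/H_2$ with the maximum $H_2 = \log_2 m$ reached for equiprobable, uncorrelated symbols. The function name and the sample numbers are illustrative, not taken from the text.

```python
import math


def redundancy(h_actual: float, alphabet_size: int) -> float:
    """Redundancy factor 1 - H1/H2, where H2 = log2(m) is the maximum entropy."""
    h_max = math.log2(alphabet_size)
    return 1 - h_actual / h_max


# A 4-symbol source with real entropy 1.5 bit/symbol against a maximum of 2.
print(redundancy(1.5, 4))  # 0.25
```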
